Over the last few years, there has been a substantial increase in the volume of research combining virtual reality with upper-limb robotics. A collateral benefit that has emerged from the development of robotic and virtual-reality technologies is the potential to study how perception and cognition contribute to eye-hand coordination. This possibility can be realized by adding video-based eye tracking to upper-extremity robots. Video-based eye trackers can non-invasively obtain information on where a subject is directly looking, commonly referred to as the “point of regard” (POR). This information allows researchers to quantify overt mechanisms of visual search by ascertaining whether objects of interest have been directly viewed (foveated). Historically, visual stimuli in video-based systems have been presented on a computer monitor placed parallel to the frontal plane, and participants have responded by moving joysticks, a mouse, or other devices located within the peripersonal workspace.
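To make the idea of checking whether an object of interest was directly viewed more concrete, here is a minimal Python sketch. It is not taken from the original work. It assumes the POR samples are already expressed as (x, y) coordinates in the same workspace plane as the object, that the object can be approximated by a circle, and that a short run of consecutive samples inside that circle counts as foveation. The function name `was_foveated`, the radius, and the `min_samples` value are all hypothetical choices for illustration.

```python
import math


def was_foveated(por_samples, center, radius, min_samples=3):
    """Return True if the POR stays within `radius` of `center`
    for at least `min_samples` consecutive samples.

    por_samples: iterable of (x, y) point-of-regard coordinates
    center:      (x, y) position of the object of interest
    radius:      tolerance around the object, same units as the samples
    min_samples: hypothetical minimum run length (not from the source)
    """
    run = 0  # length of the current run of samples inside the circle
    for x, y in por_samples:
        if math.hypot(x - center[0], y - center[1]) <= radius:
            run += 1
            if run >= min_samples:
                return True
        else:
            run = 0  # gaze left the object, so restart the count
    return False


if __name__ == "__main__":
    samples = [(0.00, 0.00), (0.01, 0.00), (0.00, 0.01), (0.50, 0.50)]
    print(was_foveated(samples, center=(0.0, 0.0), radius=0.02))
```

A real analysis would likely choose the tolerance from the tracker's reported accuracy and the object's size rather than from a fixed constant.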

Commercial availability of robotic devices for neurological assessment and rehabilitation of the upper extremity has increased exponentially since 1998. Assessment robots address a chronic need to obtain objective and quantitative measures of sensory, motor and cognitive function. Rehabilitation robots address a longstanding need to provide greater doses of challenging, adaptable and stimulating activities that can engage clinical populations for sustained periods of time.

Within the transverse plane, our algorithm reliably differentiates saccades from fixations (static visual stimuli) and smooth pursuits from saccades and fixations when visual stimuli are dynamic. The proposed methods provide advancements for examining eye movements in robotic and virtual-reality systems. Our methods can also be used with other video-based or tablet-based systems in which eye movements are performed in a peripersonal plane with variable depth.

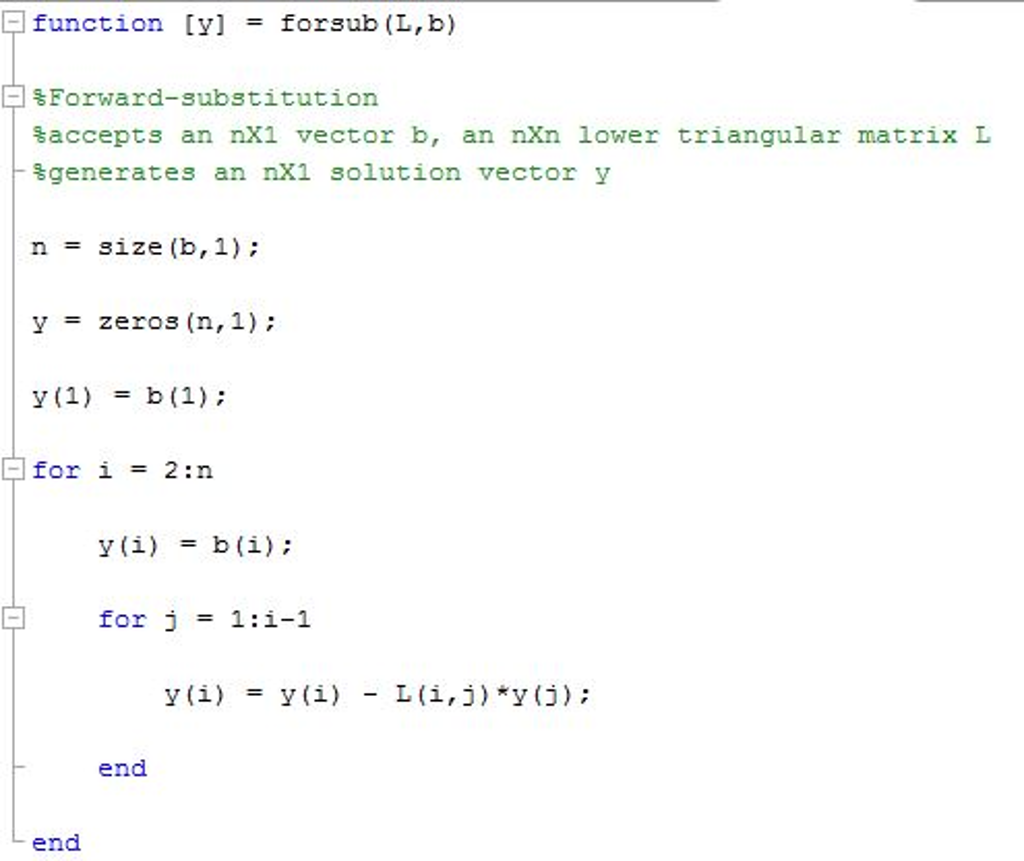


Robotic and virtual-reality systems offer tremendous potential for improving assessment and rehabilitation of neurological disorders affecting the upper extremity. A key feature of these systems is that visual stimuli are often presented within the same workspace as the hands (i.e., peripersonal space). Integrating video-based remote eye tracking with robotic and virtual-reality systems can provide an additional tool for investigating how cognitive processes influence visuomotor learning and rehabilitation of the upper extremity. However, remote eye tracking systems typically compute ocular kinematics by assuming eye movements are made in a plane with constant depth (e.g., the frontal plane). When visual stimuli are presented at variable depths (e.g., the transverse plane), eye movements have a vergence component that may influence reliable detection of gaze events (fixations, smooth pursuits and saccades). To our knowledge, there are no available methods to classify gaze events in the transverse plane for monocular remote eye tracking systems.

Here we present a geometrical method to compute ocular kinematics from a monocular remote eye tracking system when visual stimuli are presented in the transverse plane. We then use the obtained kinematics to compute velocity-based thresholds that allow us to accurately identify onsets and offsets of fixations, saccades and smooth pursuits. Finally, we validate our algorithm by comparing the gaze events computed by the algorithm with those obtained from the eye-tracking software and manual digitization.
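As a rough illustration of how gaze angular velocity might be derived geometrically and then thresholded, the Python sketch below computes the angle between successive gaze vectors (eye position to POR) and labels each interval by speed. This is a generic velocity-threshold classifier written under stated assumptions, not the algorithm described in the text: the eye position is assumed to be known and fixed in 3D, the POR samples are assumed to be 3D points on the stimulus plane, and the thresholds of 5 and 30 degrees per second are placeholder values chosen only for the example. All function names are hypothetical.

```python
import math


def gaze_angle_deg(eye, por_a, por_b):
    """Angle in degrees between the gaze vectors eye->por_a and eye->por_b."""
    ax, ay, az = (p - e for p, e in zip(por_a, eye))
    bx, by, bz = (p - e for p, e in zip(por_b, eye))
    dot = ax * bx + ay * by + az * bz
    # The cross-product magnitude with atan2 stays accurate for very small angles.
    cx = ay * bz - az * by
    cy = az * bx - ax * bz
    cz = ax * by - ay * bx
    cross_norm = math.sqrt(cx * cx + cy * cy + cz * cz)
    return math.degrees(math.atan2(cross_norm, dot))


def angular_speeds(eye, pors, dt):
    """Angular speed (deg/s) between each pair of consecutive POR samples."""
    return [
        gaze_angle_deg(eye, pors[i], pors[i + 1]) / dt
        for i in range(len(pors) - 1)
    ]


def classify(speeds, pursuit_thresh=5.0, saccade_thresh=30.0):
    """Label each speed; both thresholds are illustrative assumptions."""
    labels = []
    for s in speeds:
        if s >= saccade_thresh:
            labels.append("saccade")
        elif s >= pursuit_thresh:
            labels.append("pursuit")
        else:
            labels.append("fixation")
    return labels


if __name__ == "__main__":
    eye = (0.0, 0.0, 1.0)  # assumed eye position above the stimulus plane
    pors = [(1.0, 0.0, 0.0), (1.0, 0.0, 0.0), (0.0, 1.0, 0.0)]
    speeds = angular_speeds(eye, pors, dt=0.5)
    print(list(zip(speeds, classify(speeds))))
```

Because the angle is measured between 3D gaze vectors rather than as a displacement on a plane of constant depth, the same POR displacement yields a different angular speed depending on how far the stimulus is from the eye, which is the depth dependence the text highlights. A practical implementation would also need to filter noise and handle head movement, which this sketch ignores.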
